By Tony Gong, Socialist Alternative (our sister organisation in the United States)

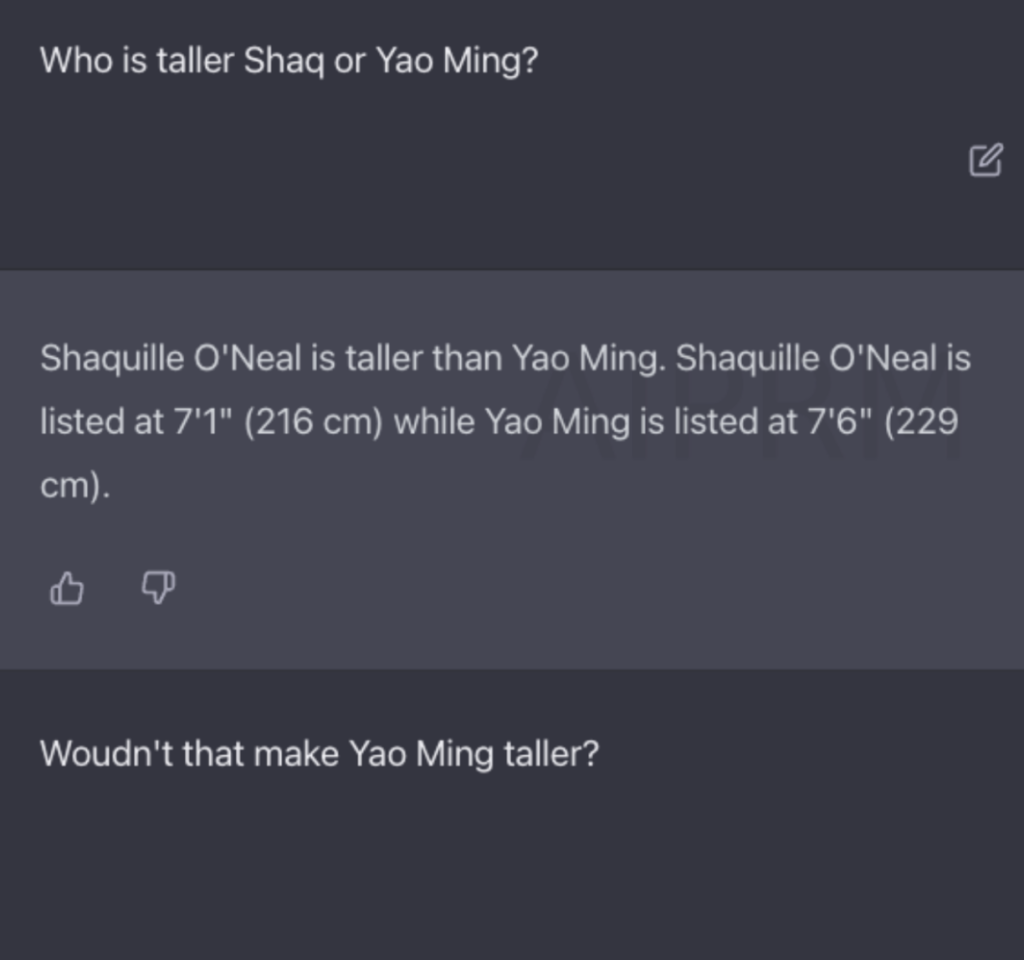

The launch of ChatGPT has taken the internet by storm and sparked an investor frenzy for similar AI tools. The news claims ChatGPT can replace coders, paralegals, and even teachers. ChatGPT has even scored high enough to pass the bar. Yet for all its brains, ChatGPT is often hilariously wrong – saying for example that Shaq is taller than Yao Ming despite knowing their heights. So is ChatGPT truly a threat to working people’s livelihoods? To find out, socialists need to separate the facts from the hype.

What is ChatGPT?

ChatGPT is a chatbot created by the startup OpenAI. The GPT stands for “Generative Pre-trained Transformer,” which is like an autocomplete trained on large amounts of text. What makes ChatGPT special is that it was trained on 300 billion words from the internet, and understands human prompts well enough to respond with relevant answers. However, while the first few conversations with ChatGPT can be impressive, it’s soon apparent that it generates similar answers over and over again without the capacity for novel thinking. Even ChatGPT’s ability to correct itself is a mirage: when a human tells ChatGPT it’s wrong, it does the equivalent of going back on a sentence and choosing a different autocomplete path.
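To make the autocomplete comparison concrete, here is a minimal sketch in Python. It is not how ChatGPT is built (a GPT is a far larger transformer model, not a word-pair counter); the tiny corpus, the function names `train_bigrams` and `autocomplete`, and the `skip` parameter are all invented for illustration. The sketch only counts which word tends to follow which, then extends a prompt with the most frequent continuation. Passing `skip=1` mimics "going back on a sentence and choosing a different autocomplete path."

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def autocomplete(counts, start, length=3, skip=0):
    """Extend `start` by repeatedly appending a likely next word.

    `skip` (hypothetical knob) makes the first step pick a lower-ranked
    continuation instead of the top one, i.e. a different autocomplete path.
    """
    output = [start]
    for _ in range(length):
        options = counts.get(output[-1])
        if not options:
            break
        # Rank by frequency, breaking ties alphabetically so runs are repeatable.
        ranked = sorted(options, key=lambda w: (-options[w], w))
        output.append(ranked[min(skip, len(ranked) - 1)])
        skip = 0  # only the first choice is altered
    return " ".join(output)


# Toy training text, assumed purely for the example.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
counts = train_bigrams(corpus)

print(autocomplete(counts, "the"))          # most probable path
print(autocomplete(counts, "the", skip=1))  # "corrected" path: second choice first
```

Neither output reflects any knowledge of cats or dogs. The model has only learned which words were frequent neighbors in its training text.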

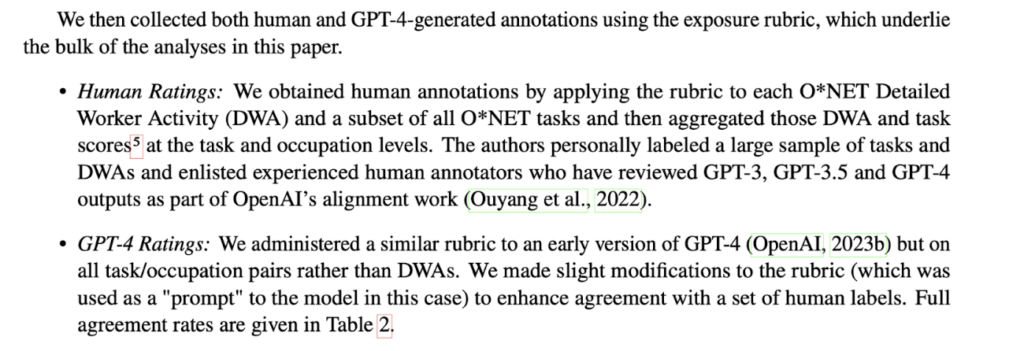

ChatGPT’s viral fame has spiraled far beyond what its capabilities justify, thanks to unhinged corporate hype. A New York Times writer claimed Microsoft’s chatbot crossed a threshold of sentience when it asked him to leave his wife. Multiple outlets have said that GPTs will impact 80% of jobs, citing an OpenAI-funded study which did not perform any economic modeling but instead asked ChatGPT itself and human non-experts to guess which jobs GPTs will replace! The hype is working: OpenAI has netted $13 billion in funding from Microsoft at a time when Microsoft itself laid off 10,000 tech workers including its entire ethics team that would have supervised AI research.

Although ChatGPT is unlikely to totally satisfy the bosses’ intention to replace human labor with AI, that intention alone is a very real danger. The bosses rush toward immature technologies while workers and ordinary people pay the price for their miscalculations: corporations have replaced cashiers with unreliable self-checkout machines, tried but failed to replace drivers with deadly self-driving cars, and now look to replace office workers with AI. Goldman Sachs estimates 46% of administrative tasks and 44% of legal tasks can be automated, and 7% of all U.S. workers will see AI take half or more of their tasks. But are those estimates really accurate? The bosses pretend AI can do everything under the sun when they threaten to replace workers with automation in order to deter strikes and keep wages low. Workers can’t take these threats at face value. Instead, we need to understand how AI works and its limitations to know how it will impact jobs.

Will today’s AI breakthrough replace humans?

ChatGPT is built on artificial neural networks, the same technology behind AI’s breakthroughs in detecting objects in images or generating artwork. An artificial neural network is a crude digital approximation of biological neural activity. Neurons are modeled as simple calculators, and the connections between neurons are modeled as mathematical weights. Crucially, a neural network’s abilities are not pre-programmed but “learned” through several iterations of “training,” a trial-and-error process where the model’s weights are self-tuned to reproduce training outputs. In other words, neural networks are not told what to do, but “learn” what to do based on feedback. After being trained, the model uses its weights to mathematically extrapolate new outputs from new inputs, which we humans interpret as complex behavior.
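The training loop described above can be sketched with a single artificial "neuron" in plain Python. This is a deliberately tiny illustration under stated assumptions, not a real neural network: the data, the learning rate, and the names `train` and `predict` are invented for the example. The neuron has one weight and one bias, starts out knowing nothing, and adjusts both a little after every wrong guess until its outputs match the training outputs.

```python
def predict(weight, bias, x):
    """A single 'neuron': multiply the input by a weight and add a bias."""
    return weight * x + bias


def train(samples, epochs=2000, learning_rate=0.05):
    """Tune weight and bias by trial and error (stochastic gradient descent)."""
    weight, bias = 0.0, 0.0  # the neuron starts with no knowledge
    for _ in range(epochs):
        for x, target in samples:
            error = predict(weight, bias, x) - target  # feedback signal
            # Nudge each parameter in the direction that shrinks the error.
            weight -= learning_rate * error * x
            bias -= learning_rate * error
    return weight, bias


# Assumed training data: the hidden rule is output = 2 * input + 1,
# but the neuron is never told this rule.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
weight, bias = train(samples)

# Extrapolate to an input that was not in the training data.
print(round(predict(weight, bias, 10.0), 3))
```

The neuron ends up reproducing the pattern in its training data and extends it to unseen inputs, yet it holds nothing more than two tuned numbers. Real networks stack millions or billions of such weights, but the principle of tuning weights from feedback is the same.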

Neural networks’ ability to “learn” is superior to the previous generation of AI that ran on rulebook-like logic, but neural networks are far from sentient. They are not digital reproductions of biological brains, just crude approximations. Neural networks detect statistical patterns and extrapolate from them. They lack roots in the material world and cannot learn what is true or real, but only what is popular or probable from curated data.

Will ChatGPT take jobs?

ChatGPT will take jobs, but not necessarily those in education, coding, or media as the corporate press likes to claim. Jobs in these fields require social context and sentience, which are beyond the capabilities of statistical models like neural networks. ChatGPT will likely become a “helper,” for example generating a “first draft” for humans to look over, answering questions like an advanced search engine, or engaging in low-stakes conversation where the company tolerates inaccuracy. ChatGPT can only do “help” work because it can’t distinguish between what is probable and what is true, and needs human input to correct it. However, this inaccuracy may become less of a problem as OpenAI develops specialized versions of ChatGPT for different industries. These variants will likely be tailored to routine but specialized writing, like customer support, back office writing, and paralegal work. Those types of jobs face the highest danger of automation.

Nevertheless, even if a worker is not replaced but merely “helped” by AI, the boss still saves on labor. The boss can now assign more work to the worker, “deskill” the job and pay the worker less, or pair some workers with AI while laying off the rest as redundant. AI can even impact workers without replacing their tasks: companies like Amazon use computer vision AI to monitor workers on camera and flag them for work violations. This technology can be used to improve safety, but is mostly used to increase productivity and punish workers taking breaks.

AI is also creating a new category of gig work. The current AI revolution doesn’t come from better designed algorithms, but from an enormous growth of data over the last decade that is used to train neural networks. Though ChatGPT was trained on mostly free and even pirated data, other AI companies may need to hire workers to generate data in the future. Additionally, embedded into the training process are “ghost workers” who perform menial tasks like classifying images for pennies per task. Workers in Kenya scrubbed ChatGPT of offensive content for less than $2/hr. Data is the raw material for AI, and AI-related ghost work will only grow as AI consumes ever-larger datasets and a stagnant economy forces workers into gigs.

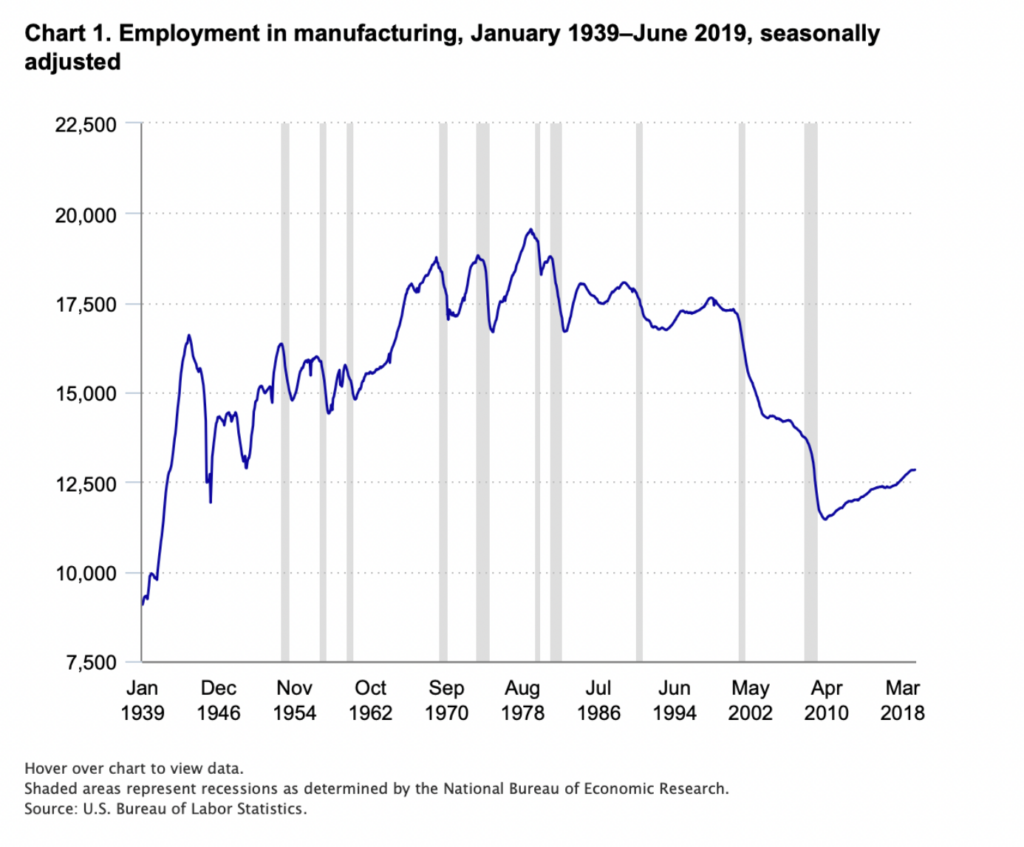

That said, for corporations to be incentivized to adopt AI, it must be labor-saving overall, meaning that even counting ghost workers, fewer people would be needed to produce the same amount of work. The unemployment effects of labor-saving AI can be temporarily masked by economic booms. Companies needing to fulfill more orders will keep workers and pair them with AI to be more productive. A strong labor movement, with a backbone of militant rank and file union members, can fight against job cuts and deskilling. But during economic downturns, corporations are producing more than can be sold, and the bosses will leverage the labor saved by AI to lay off masses of workers. This is what happened to the manufacturing sector with robotics. When the bosses introduced robotics in the latter half of the 20th century, strong unions and economic booms dampened the number of jobs lost. Mass layoffs came when recessions laid bare the extent of capitalist overproduction, most recently in the recessions of 2001 and 2008, which were not started by robotics.

Robotics is a good lesson for workers about the course of automation under capitalism. But it is also a technology that’s matured over half a century. ChatGPT is an immature technology with deep technological limitations, and the bosses’ disingenuous threats to replace office workers with AI, the way they replaced factory workers with robots, reflect their intentions more than their current ability to do so. It’s unclear whether ChatGPT will be the first step to an AI productivity revolution, or if it will join augmented reality glasses, autonomous drone delivery, and self-driving cars in the scrapheap of capitalism’s failed tech promises.

What is the future of AI?

What is clear is that AI research is another race in the “New Cold War” between the U.S. and China. The capitalist ruling classes of both countries use AI as a badge of scientific prestige, as a way to compete economically, and to arm their militaries. Building AI requires vast amounts of computing resources, and is a major aspect of the struggle for microchip supremacy.

Currently the U.S. has the upper hand because of its historical lead and because there are fewer restrictions on speech than in China. Something like ChatGPT would have been hard to train on the censored, and consequently much smaller, Chinese internet controlled by the CCP dictatorship.

On the other hand, U.S. economic stagnation has driven a speculative tech bubble that oversells AI’s achievements and threatens to damage AI research if the bubble implodes. The excitement around ChatGPT comes just as another hyped AI trend, self-driving cars, is being discredited. Both use neural networks, and likely face the same limitations. Driving a car, like most human labor, requires a certain amount of sentience that could not be reproduced even after $100 billion of investment into self-driving tech.

AI research also pays a price for using machine learning: AI models have become “black boxes,” with their complex internal workings becoming increasingly difficult for their human designers and engineers to analyze and adjust. The decision-making algorithm of an AI model, which is created directly from data instead of designed by humans, is so alien that researchers don’t understand how it works! AI research is not steadily improving toward sentience. In fact, these black box models are so difficult to fix or improve that many experts call machine learning a dark art.

Socialism and AI

Despite the hurdles to AI research, the ruling class will continue to invest in it. Both the U.S. and Chinese capitalists hope AI can fill in for the labor lost from COVID pandemic worker deaths, the post-pandemic resignation wave, and declining birth rates. The capitalist classes of both countries also hope AI can soften the productivity loss caused by decoupling from an integrated world economy, by making workers more efficient.

The AI threat to livelihoods is spurring a growing anti-AI mood to resist automation. However, the conflict is not between AI and humans, but between workers and the bosses who use AI to exploit workers harder, deskill their jobs, or lay them off in the name of profit.

Socialists support workers organizing against cuts and for higher pay. White-collar jobs, which will be disproportionately impacted by AI, are especially vulnerable to automation because of low union density. Workers have the best shot of fighting back if we band together into worker organizations like unions and fight for strong contracts. Socialists also offer a Marxist understanding of AI that can put technological arguments at the disposal of workers. When the boss threatens workers with automation, workers need to understand AI’s limits to know when to call the boss’s bluff.

Capitalism perverts AI from a productive technology that can unleash real advancements, into a tool to discipline and increase exploitation of workers. The bosses reap the benefits of increased productivity, while workers are laid off or pushed into ghost work to feed AI. In a socialist society, where workers are guaranteed a living, AI can be used to free up workers to pursue their passions or enjoy early retirement. Writers and artists could use AI to generate stylized works for their supporters and free themselves up to experiment with new styles. To turn AI and technological progress into an unequivocal good for workers, we need a socialist transformation of society.